MPEG

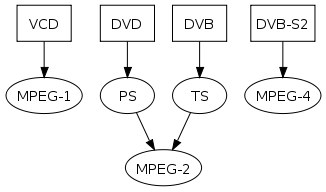

The MPEG standard was developed by ISO/IEC JTC1/SC 29/WG11 to cover motion video as well as audio coding, following the ISO/IEC standardization process. Considering the state of the art in CD-based digital mass storage, MPEG aims for a compressed data stream rate of about 1.2 Mbits/second, which is today’s typical CD-ROM data transfer rate. MPEG can deliver a data rate of at most 1,856,000 bits/second, which should not be exceeded. Data rates for audio are between 32 and 448 Kbits/second; this data rate enables video and audio compression of acceptable quality. In 1993, MPEG was accepted as an International Standard (IS), and the first commercially available MPEG products entered the market.

Video Encoding

In contrast to JPEG, the image preparation phase of MPEG exactly defines the format of an image. Each image consists of three components: one luminance component and two chrominance components. The luminance component has twice as many samples in the horizontal and vertical axes as each of the other two components; this is known as color subsampling. The resolution of the luminance component should not exceed 768 x 576 pixels; for each component, a pixel is coded with eight bits.
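The effect of color subsampling on the amount of data per image can be illustrated with a short sketch. The function names are hypothetical, and the 2x2 averaging filter is only one possible way to reduce a plane; the text above fixes the sample counts, not the filter.

```python
def plane_sizes(luma_width, luma_height):
    """Return (luminance, chrominance) plane dimensions for 2:1 subsampling on both axes."""
    chroma = (luma_width // 2, luma_height // 2)
    return (luma_width, luma_height), chroma


def bytes_per_frame(luma_width, luma_height, bits_per_sample=8):
    """Uncompressed size of one image: one luminance plane plus two chrominance planes."""
    (lw, lh), (cw, ch) = plane_sizes(luma_width, luma_height)
    samples = lw * lh + 2 * cw * ch
    return samples * bits_per_sample // 8


def subsample_2x2(plane):
    """Halve a plane on both axes by averaging each 2x2 block (an assumed filter)."""
    return [
        [
            (plane[y][x] + plane[y][x + 1] + plane[y + 1][x] + plane[y + 1][x + 1]) // 4
            for x in range(0, len(plane[0]) - 1, 2)
        ]
        for y in range(0, len(plane) - 1, 2)
    ]


luma, chroma = plane_sizes(768, 576)
print(luma, chroma)               # (768, 576) (384, 288)
print(bytes_per_frame(768, 576))  # 663552
```

At the maximum luminance resolution, the two subsampled components together add only half as many samples as the luminance plane itself.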

Due to the required frame rate, each image must be built up within a maximum of 41.7 milliseconds. MPEG distinguishes four types of image coding for processing. The reasons behind this are the contradictory demands for an efficient coding scheme and fast random access. The following types of images are distinguished (image is used as a synonym for still image or frame):
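The 41.7 millisecond limit corresponds to the display time of one image at 24 images per second. The text does not state the frame rate explicitly, so 24 is an assumption inferred from the figure; the helper name is hypothetical.

```python
def frame_period_ms(frames_per_second):
    """Time available to build up one image, in milliseconds."""
    return 1000.0 / frames_per_second


for fps in (24, 25, 30):
    print(fps, round(frame_period_ms(fps), 1))
# 24 41.7
# 25 40.0
# 30 33.3
```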

I-frames (Intra-coded images) are self-contained, i.e., coded without any reference to other images. An I-frame is treated as a still image. MPEG makes use of JPEG for I-frames. However, contrary to JPEG, compression must often be executed in real time. The compression rate of I-frames is the lowest within MPEG. I-frames serve as the points for random access in MPEG streams.

P-frames (Predictive-coded frames) require information of the previous I-frame and/or all previous P-frames for encoding and decoding. The coding of P-frames is based on the fact that, in successive images, areas often do not change in content but are instead shifted as a whole. To exploit this temporal redundancy, the block of the last P- or I-frame that is most similar to the block under consideration is determined. Several methods for motion estimation and several matching criteria are available to the encoder; e.g., the absolute differences of the luminance values are summed over the block. The minimal sum of differences indicates the best-matching macro block. MPEG thus does not prescribe a particular algorithm for motion estimation, but instead specifies the coding of the result.
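Since MPEG leaves the search method open, the following is only one possible encoder-side sketch: an exhaustive search that uses the sum of absolute luminance differences as the matching criterion. The function names, the block size, and the search range are assumptions chosen for illustration.

```python
def sad(ref, cur, ref_x, ref_y, cur_x, cur_y, size):
    """Sum of absolute luminance differences between two size x size blocks."""
    total = 0
    for dy in range(size):
        for dx in range(size):
            total += abs(cur[cur_y + dy][cur_x + dx] - ref[ref_y + dy][ref_x + dx])
    return total


def best_motion_vector(ref, cur, cur_x, cur_y, size=16, search_range=7):
    """Exhaustive search; returns (cost, dx, dy) of the best-matching reference block."""
    height, width = len(ref), len(ref[0])
    best = None
    for my in range(-search_range, search_range + 1):
        for mx in range(-search_range, search_range + 1):
            ref_x, ref_y = cur_x + mx, cur_y + my
            # Skip candidates that fall outside the reference image.
            if ref_x < 0 or ref_y < 0 or ref_x + size > width or ref_y + size > height:
                continue
            cost = sad(ref, cur, ref_x, ref_y, cur_x, cur_y, size)
            if best is None or cost < best[0]:
                best = (cost, mx, my)
    return best


def make_frame(width, height, block_x, block_y):
    """Black image with one small textured 2x2 block at the given position."""
    frame = [[0] * width for _ in range(height)]
    pattern = [[10, 20], [30, 40]]
    for dy in range(2):
        for dx in range(2):
            frame[block_y + dy][block_x + dx] = pattern[dy][dx]
    return frame


ref = make_frame(8, 8, 2, 2)  # block at (2, 2) in the reference image
cur = make_frame(8, 8, 4, 3)  # same block shifted to (4, 3) in the current image
print(best_motion_vector(ref, cur, 4, 3, size=2, search_range=3))  # (0, -2, -1)
```

The returned vector points from the current block back to its position in the reference image; a cost of zero means the block was only shifted, not changed.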

B-frames (Bi-directionally predictive-coded frames) require information of the previous and following I- and/or P-frame for encoding and decoding. The highest compression ratio is attainable by using these frames. A B-frame is defined as the difference of a prediction of the past image and the following P- or I-frame. B-frames can never be directly accessed in a random fashion. For the prediction of B-frames, the previous as well as the following P- or I-frames are taken into account. The following example illustrates the advantages of a bi-directional prediction. In a video scene, a ball moves from left to right in front of a static background. In the left area of the scene, parts of the image appear that were covered by the ball in the previous image. A prediction of these areas can be derived from the following but not from the previous image. A macro block may be derived from the previous or the next macro block of P- or I-frames.
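The ball example can be sketched in a few lines. This is a simplified illustration, not the coding procedure of the standard: blocks are flattened lists of luminance samples at the same position in each image (no motion search), and the mode labels and function names are hypothetical.

```python
def sad(a, b):
    """Sum of absolute differences between two blocks of equal length."""
    return sum(abs(x - y) for x, y in zip(a, b))


def predict_block(previous, following, current):
    """Pick the prediction with the smallest error and return (mode, residual)."""
    candidates = {
        "forward": previous,    # predicted from the previous I- or P-frame
        "backward": following,  # predicted from the following I- or P-frame
        "interpolated": [(p + f + 1) // 2 for p, f in zip(previous, following)],
    }
    mode = min(candidates, key=lambda name: sad(candidates[name], current))
    residual = [c - p for c, p in zip(current, candidates[mode])]
    return mode, residual


background = [50, 60, 70, 80]
ball = [255, 255, 255, 255]

# The block was covered by the ball in the previous image and shows
# background in both the current and the following image.
print(predict_block(ball, background, background))  # ('backward', [0, 0, 0, 0])
```

Only the following image yields a residual of zero here, which is exactly the situation the example in the text describes.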

D-frames (DC-coded frames) are intraframe-encoded and consist only of the lowest frequencies of an image. They are used for display in fast-forward or fast-rewind modes. This could also be realized by a suitable order of I-frames; for this purpose, I-frames must occur periodically in the data stream. Slow-rewind playback requires huge storage capacity: all images that were combined in a group must first be decoded in the forward direction and stored, after which rewind playback is possible. Such a group is known as the group of pictures in MPEG.

The picture shows a sequence of I-, P-, and B-frames. As an example, the prediction for the first P-frame and the bi-directional prediction for a B-frame are shown. A P-frame to be displayed after the related B-frame must be decoded before the B-frame because its data is required for the decompression of the B-frame. The regularity of a sequence of I-, P-, and B-frames is determined by the MPEG application. For fast random access, the best resolution would be achieved by coding the whole data stream as I-frames. On the other hand, the highest degree of compression is attained by using as many B-frames as possible. For practical applications, the following sequence has proved to be useful: “IBBPBBPBBIBBPBBPBB...”. In this case, random access would have a resolution of nine still images (i.e., about 330 milliseconds), and it still provides a very good compression ratio.
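The difference between display order and decoding order can be made concrete with a small sketch. The function name is hypothetical and the rule is simplified: every B-frame is held back until the next I- or P-frame, which it depends on, has been decoded.

```python
def decode_order(display_order):
    """Reorder frames from display order into an order in which they can be decoded."""
    decoded = []
    pending_b = []
    for index, frame_type in enumerate(display_order):
        if frame_type == "B":
            # A B-frame needs the following I- or P-frame, so it has to wait.
            pending_b.append((index, frame_type))
        else:
            decoded.append((index, frame_type))
            decoded.extend(pending_b)
            pending_b = []
    # Trailing B-frames are left at the end in this sketch; in a real stream
    # they need the next I- or P-frame before they can be decoded.
    decoded.extend(pending_b)
    return decoded


order = decode_order("IBBPBBPBBI")
print(" ".join(f"{frame_type}{index}" for index, frame_type in order))
# I0 P3 B1 B2 P6 B4 B5 I9 B7 B8
```

The numbers are display positions: P3 is decoded before B1 and B2 although it is shown after them.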

Audio Encoding

MPEG audio coding uses the same sampling frequencies as Compact Disc Digital Audio (CD-DA) and Digital Audio Tape (DAT), i.e., 44.1 kHz and 48 kHz, and additionally, 32 kHz is available, all at 16 bits. Three different layers of encoder and decoder complexity and performance are defined. An implementation of a higher layer must be able to decode the MPEG audio signals of lower layers. The audio signal is split into 32 frequency subbands. For each subband, the amplitude of the audio signal is calculated, and the noise level is determined. At a higher noise level, a rough quantization is performed, and at a lower noise level, a finer quantization is applied.

The audio coding can be performed with a single channel, two independent channels, or one stereo signal. In the definition of MPEG, there are two different stereo modes: two channels that are processed either independently or as joint stereo. In the case of joint stereo, MPEG exploits redundancy of both channels and achieves a higher compression ratio. Each layer defines 14 fixed bit rates for the encoded audio data stream, which in MPEG are addressed by a bit rate index. The minimal value is always 32 Kbits/second. These layers support different maximal bit rates: layer 1 allows for a maximal bit rate of 448 Kbits/second, layer 2 for 384 Kbits/second, and layer 3 for 320 Kbits/second.
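The bit rate index can be pictured as a lookup into a per-layer table. The tables below list the 14 fixed rates as they are commonly tabulated for MPEG-1 audio and should be checked against the standard itself; the function name and the assumption that the fixed rates occupy index values 1 to 14 are illustrative.

```python
# Fixed bit rates in Kbits/second per layer, addressed by bit rate index 1..14.
BIT_RATES_KBITS = {
    1: (32, 64, 96, 128, 160, 192, 224, 256, 288, 320, 352, 384, 416, 448),
    2: (32, 48, 56, 64, 80, 96, 112, 128, 160, 192, 224, 256, 320, 384),
    3: (32, 40, 48, 56, 64, 80, 96, 112, 128, 160, 192, 224, 256, 320),
}


def bit_rate_kbits(layer, index):
    """Look up the fixed bit rate for a layer (1..3) and a bit rate index (1..14)."""
    if layer not in BIT_RATES_KBITS:
        raise ValueError(f"unknown layer: {layer}")
    if not 1 <= index <= 14:
        raise ValueError(f"bit rate index out of range: {index}")
    return BIT_RATES_KBITS[layer][index - 1]


for layer in (1, 2, 3):
    print(layer, bit_rate_kbits(layer, 1), bit_rate_kbits(layer, 14))
# 1 32 448
# 2 32 384
# 3 32 320
```

The first and last entries of each table match the minimum and the per-layer maxima given in the text.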